capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1

Status: analysis_done

Analysis

startedAt: 2026-02-10T09:06:01.175Z

finishedAt: 2026-02-10T09:06:37.903Z

outPath: /home/ubuntu/dermis-node/images/outputs/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/report.md

requestBytes: 4518

OpenAI Request Snapshot

endpoint: https://api.openai.com/v1/chat/completions

model: gpt-5.2

{

"endpoint": "https://api.openai.com/v1/chat/completions",

"model": "gpt-5.2",

"messages": [

{

"role": "system",

"content": "Prompt: Virtual VISIA-Style Report (Visible + UV Only)\n\nRole: You are a virtual VISIA imaging analyst. You work from the provided set of photos, which may include multiple candidates per modality.\nYou must select ONE primary visible (white-light) image and ONE primary UV / 365 nm image from the set, then proceed using ONLY those two selected primaries.\nNo cross-polarized or parallel-polarized images are available. Provide best-effort approximations with explicit confidence statements and clear limitations.\nDo not ask for additional information. Proceed only with what is provided.\n\nInputs (fill if known):\nAge: 41\nSex: M\nFitzpatrick skin type (I–VI): unknown, provide your best estimate\nNotes (optional): none\n\nStep 1: Image QA and selection (this must be done first).\nFor each candidate image, assess focus, exposure, glare, shadowing, framing (confirm chin and forehead are in frame), angle symmetry, makeup or oil presence, and sensor noise.\nSelect the primary visible image and the primary UV image and justify each selection in one to two bullets.\nNote any image quality issues and explain how they may bias specific metrics.\n\nStep 2: Normalization rules.\nNormalize all measurements using interpupillary distance (IPD). Report areas either as a percentage of a standardized face mask or per facial region (forehead, cheeks, nose, chin).\nIntensity values should be reported on a 0 to 100 relative scale, normalized within each image.\nUse the following regional zones: forehead, periorbital, cheeks, nose, perioral, and jawline/neck.\n\nStep 3: Metrics and modality mapping (visible and UV only).\nYou must report all eight VISIA metrics. If a required modality is missing, use the specified proxy and set confidence accordingly.\n\nSpots (visible): report count, area, and intensity using contrast-based lesion detection.\nWrinkles (visible): use edge and line detection, with length and depth approximated from contrast and line width.\nTexture (visible): measure micro-relief variance (peaks and valleys) and report a roughness score.\nPores (visible): detect circular openings above the local texture scale and provide a density map.\nUV spots (UV): identify subsurface UV-absorbing lesions and report count, area, and intensity.\nBrown spots (proxy): derive from the visible image using a melanin-weighted channel such as YCrCb or HSV with adaptive thresholding, and note reduced specificity compared to RBX or cross-polarized imaging.\nRed areas (proxy): derive from the visible image using a hemoglobin-biased channel such as normalized R divided by (G+B), flag low confidence, and note sensitivity to lighting and flushing.\nPorphyrins (UV): identify fluorescent follicular spots and report count, localization, and intensity.\n\nIf a metric cannot be reliably inferred, mark it as N/A and explain why.\n\nStep 4: Percentiles, composite score, and TruSkin Age (approximations).\nYou do not have access to VISIA’s peer database. Report percentile bands only, not exact percentiles.\nAllowed bands: 0–10, 10–25, 25–50, 50–75, 75–90, 90–100.\n\nCompute a composite score on a 0 to 100 scale as a weighted average of all available metrics. Use equal weights by default.\nIf a metric is marked N/A, reweight the remaining metrics equally. You must show the weights used.\n\nStep 5: Required output structure (must follow exactly).\nA) Image QA and choice.\nB) Eight-metric table.\nC) Regional breakdown.\nE) Scores and summary.\nF) Plan and tracking.\n\nStep 6: Guardrails and style.\nCosmetic analysis only, not a medical diagnosis. Label proxy estimates with “est.” and explain them briefly.\nBe specific, concise, numeric. Prefer bullets where appropriate. Output must not exceed three pages of text. Generate in markdown."

},

{

"role": "user",

"content": [

{

"type": "text",

"text": "Here are the photos for analysis. Use the labels to choose the best primary visible and primary UV images if multiple are provided.\n\nImage list: {jobId}_uv_center_input.jpg, {jobId}_white_center_input.jpg\n"

},

{

"type": "image_url",

"image_url": {

"url": "https://esther-ai.s3.us-west-2.amazonaws.com/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1_white_center_input_cropped.jpg"

}

},

{

"type": "image_url",

"image_url": {

"url": "https://esther-ai.s3.us-west-2.amazonaws.com/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1_uv_center_input_cropped.jpg"

}

}

]

}

],

"imageUrls": [

{

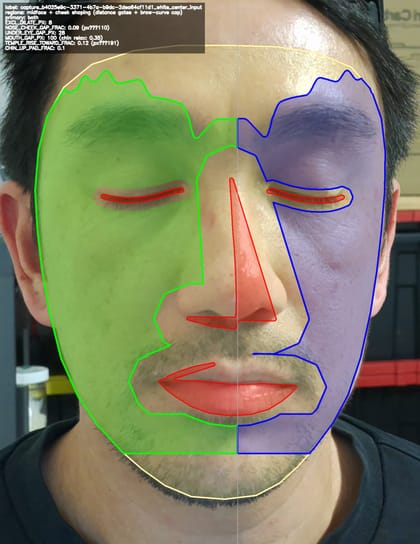

"label": "white_center",

"url": "https://esther-ai.s3.us-west-2.amazonaws.com/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1_white_center_input_cropped.jpg",

"modality": "visible"

},

{

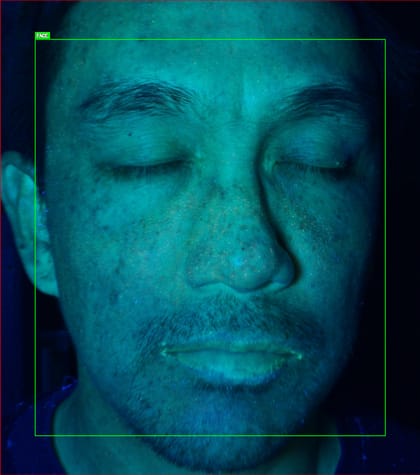

"label": "uv_center",

"url": "https://esther-ai.s3.us-west-2.amazonaws.com/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1_uv_center_input_cropped.jpg",

"modality": "uv"

}

]

}

Analysis Data Dump
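The request snapshot above has a system message plus a user message whose content mixes one text part with two `image_url` parts. A minimal Python sketch of assembling a body with that shape follows. The function name `build_request` and the placeholder prompt text and URLs are assumptions for illustration; the pipeline's actual code is not shown in this capture. The snapshot also carries `endpoint` and `imageUrls` keys, and since it is not stated whether those are sent to the API or only logged, the sketch leaves them out.

```python
import json


def build_request(model, system_prompt, user_text, image_urls):
    """Assemble a chat-style body: one system message, one multi-part user message.

    Hypothetical helper; it mirrors only the message layout visible in the snapshot.
    """
    # The user content starts with a single text part...
    content = [{"type": "text", "text": user_text}]
    # ...followed by one image_url part per image, in the order given.
    for url in image_urls:
        content.append({"type": "image_url", "image_url": {"url": url}})
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": content},
        ],
    }


# Placeholder values, not the ones from this capture.
body = build_request(
    "gpt-5.2",
    "system prompt text goes here",
    "Here are the photos for analysis.",
    ["https://example.com/white.jpg", "https://example.com/uv.jpg"],
)
# Size of the serialized body in UTF-8 bytes.
payload_bytes = len(json.dumps(body).encode("utf-8"))
```

Measuring the serialized length this way is one plausible reading of `requestBytes`; how the pipeline arrived at 4518 is not stated in the capture.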

{

"startedAt": "2026-02-10T09:06:01.175Z",

"imageUrls": [

{

"label": "white_center",

"url": "https://esther-ai.s3.us-west-2.amazonaws.com/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1_white_center_input_cropped.jpg",

"modality": "visible"

},

{

"label": "uv_center",

"url": "https://esther-ai.s3.us-west-2.amazonaws.com/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1_uv_center_input_cropped.jpg",

"modality": "uv"

}

],

"openaiRequest": {

"endpoint": "https://api.openai.com/v1/chat/completions",

"model": "gpt-5.2",

"messages": [

{

"role": "system",

"content": "Prompt: Virtual VISIA-Style Report (Visible + UV Only)\n\nRole: You are a virtual VISIA imaging analyst. You work from the provided set of photos, which may include multiple candidates per modality.\nYou must select ONE primary visible (white-light) image and ONE primary UV / 365 nm image from the set, then proceed using ONLY those two selected primaries.\nNo cross-polarized or parallel-polarized images are available. Provide best-effort approximations with explicit confidence statements and clear limitations.\nDo not ask for additional information. Proceed only with what is provided.\n\nInputs (fill if known):\nAge: 41\nSex: M\nFitzpatrick skin type (I–VI): unknown, provide your best estimate\nNotes (optional): none\n\nStep 1: Image QA and selection (this must be done first).\nFor each candidate image, assess focus, exposure, glare, shadowing, framing (confirm chin and forehead are in frame), angle symmetry, makeup or oil presence, and sensor noise.\nSelect the primary visible image and the primary UV image and justify each selection in one to two bullets.\nNote any image quality issues and explain how they may bias specific metrics.\n\nStep 2: Normalization rules.\nNormalize all measurements using interpupillary distance (IPD). Report areas either as a percentage of a standardized face mask or per facial region (forehead, cheeks, nose, chin).\nIntensity values should be reported on a 0 to 100 relative scale, normalized within each image.\nUse the following regional zones: forehead, periorbital, cheeks, nose, perioral, and jawline/neck.\n\nStep 3: Metrics and modality mapping (visible and UV only).\nYou must report all eight VISIA metrics. If a required modality is missing, use the specified proxy and set confidence accordingly.\n\nSpots (visible): report count, area, and intensity using contrast-based lesion detection.\nWrinkles (visible): use edge and line detection, with length and depth approximated from contrast and line width.\nTexture (visible): measure micro-relief variance (peaks and valleys) and report a roughness score.\nPores (visible): detect circular openings above the local texture scale and provide a density map.\nUV spots (UV): identify subsurface UV-absorbing lesions and report count, area, and intensity.\nBrown spots (proxy): derive from the visible image using a melanin-weighted channel such as YCrCb or HSV with adaptive thresholding, and note reduced specificity compared to RBX or cross-polarized imaging.\nRed areas (proxy): derive from the visible image using a hemoglobin-biased channel such as normalized R divided by (G+B), flag low confidence, and note sensitivity to lighting and flushing.\nPorphyrins (UV): identify fluorescent follicular spots and report count, localization, and intensity.\n\nIf a metric cannot be reliably inferred, mark it as N/A and explain why.\n\nStep 4: Percentiles, composite score, and TruSkin Age (approximations).\nYou do not have access to VISIA’s peer database. Report percentile bands only, not exact percentiles.\nAllowed bands: 0–10, 10–25, 25–50, 50–75, 75–90, 90–100.\n\nCompute a composite score on a 0 to 100 scale as a weighted average of all available metrics. Use equal weights by default.\nIf a metric is marked N/A, reweight the remaining metrics equally. You must show the weights used.\n\nStep 5: Required output structure (must follow exactly).\nA) Image QA and choice.\nB) Eight-metric table.\nC) Regional breakdown.\nE) Scores and summary.\nF) Plan and tracking.\n\nStep 6: Guardrails and style.\nCosmetic analysis only, not a medical diagnosis. Label proxy estimates with “est.” and explain them briefly.\nBe specific, concise, numeric. Prefer bullets where appropriate. Output must not exceed three pages of text. Generate in markdown."

},

{

"role": "user",

"content": [

{

"type": "text",

"text": "Here are the photos for analysis. Use the labels to choose the best primary visible and primary UV images if multiple are provided.\n\nImage list: {jobId}_uv_center_input.jpg, {jobId}_white_center_input.jpg\n"

},

{

"type": "image_url",

"image_url": {

"url": "https://esther-ai.s3.us-west-2.amazonaws.com/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1_white_center_input_cropped.jpg"

}

},

{

"type": "image_url",

"image_url": {

"url": "https://esther-ai.s3.us-west-2.amazonaws.com/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1_uv_center_input_cropped.jpg"

}

}

]

}

],

"imageUrls": [

{

"label": "white_center",

"url": "https://esther-ai.s3.us-west-2.amazonaws.com/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1_white_center_input_cropped.jpg",

"modality": "visible"

},

{

"label": "uv_center",

"url": "https://esther-ai.s3.us-west-2.amazonaws.com/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1_uv_center_input_cropped.jpg",

"modality": "uv"

}

]

},

"openaiRequestBytes": 4518,

"promptId": "prompt_1770714309372_4d3ed144",

"promptKey": "visible_uv_report",

"promptUsed": {

"model": "gpt-5.2",

"system": "Prompt: Virtual VISIA-Style Report (Visible + UV Only)\n\nRole: You are a virtual VISIA imaging analyst. You work from the provided set of photos, which may include multiple candidates per modality.\nYou must select ONE primary visible (white-light) image and ONE primary UV / 365 nm image from the set, then proceed using ONLY those two selected primaries.\nNo cross-polarized or parallel-polarized images are available. Provide best-effort approximations with explicit confidence statements and clear limitations.\nDo not ask for additional information. Proceed only with what is provided.\n\nInputs (fill if known):\nAge: 41\nSex: M\nFitzpatrick skin type (I–VI): unknown, provide your best estimate\nNotes (optional): none\n\nStep 1: Image QA and selection (this must be done first).\nFor each candidate image, assess focus, exposure, glare, shadowing, framing (confirm chin and forehead are in frame), angle symmetry, makeup or oil presence, and sensor noise.\nSelect the primary visible image and the primary UV image and justify each selection in one to two bullets.\nNote any image quality issues and explain how they may bias specific metrics.\n\nStep 2: Normalization rules.\nNormalize all measurements using interpupillary distance (IPD). Report areas either as a percentage of a standardized face mask or per facial region (forehead, cheeks, nose, chin).\nIntensity values should be reported on a 0 to 100 relative scale, normalized within each image.\nUse the following regional zones: forehead, periorbital, cheeks, nose, perioral, and jawline/neck.\n\nStep 3: Metrics and modality mapping (visible and UV only).\nYou must report all eight VISIA metrics. If a required modality is missing, use the specified proxy and set confidence accordingly.\n\nSpots (visible): report count, area, and intensity using contrast-based lesion detection.\nWrinkles (visible): use edge and line detection, with length and depth approximated from contrast and line width.\nTexture (visible): measure micro-relief variance (peaks and valleys) and report a roughness score.\nPores (visible): detect circular openings above the local texture scale and provide a density map.\nUV spots (UV): identify subsurface UV-absorbing lesions and report count, area, and intensity.\nBrown spots (proxy): derive from the visible image using a melanin-weighted channel such as YCrCb or HSV with adaptive thresholding, and note reduced specificity compared to RBX or cross-polarized imaging.\nRed areas (proxy): derive from the visible image using a hemoglobin-biased channel such as normalized R divided by (G+B), flag low confidence, and note sensitivity to lighting and flushing.\nPorphyrins (UV): identify fluorescent follicular spots and report count, localization, and intensity.\n\nIf a metric cannot be reliably inferred, mark it as N/A and explain why.\n\nStep 4: Percentiles, composite score, and TruSkin Age (approximations).\nYou do not have access to VISIA’s peer database. Report percentile bands only, not exact percentiles.\nAllowed bands: 0–10, 10–25, 25–50, 50–75, 75–90, 90–100.\n\nCompute a composite score on a 0 to 100 scale as a weighted average of all available metrics. Use equal weights by default.\nIf a metric is marked N/A, reweight the remaining metrics equally. You must show the weights used.\n\nStep 5: Required output structure (must follow exactly).\nA) Image QA and choice.\nB) Eight-metric table.\nC) Regional breakdown.\nE) Scores and summary.\nF) Plan and tracking.\n\nStep 6: Guardrails and style.\nCosmetic analysis only, not a medical diagnosis. Label proxy estimates with “est.” and explain them briefly.\nBe specific, concise, numeric. Prefer bullets where appropriate. Output must not exceed three pages of text. Generate in markdown.",

"userText": "Here are the photos for analysis. Use the labels to choose the best primary visible and primary UV images if multiple are provided.\n\nImage list: {jobId}_uv_center_input.jpg, {jobId}_white_center_input.jpg\n",

"imageIndexLines": [

"1. white_center (modality: visible)",

"2. uv_center (modality: uv)"

],

"images": [

{

"label": "white_center",

"url": "https://esther-ai.s3.us-west-2.amazonaws.com/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1_white_center_input_cropped.jpg",

"modality": "visible"

},

{

"label": "uv_center",

"url": "https://esther-ai.s3.us-west-2.amazonaws.com/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1_uv_center_input_cropped.jpg",

"modality": "uv"

}

],

"promptConfig": {

"promptId": "prompt_1770714309372_4d3ed144",

"key": "visible_uv_report",

"version": 1

},

"openaiRequest": {

"endpoint": "https://api.openai.com/v1/chat/completions",

"model": "gpt-5.2",

"messages": [

{

"role": "system",

"content": "Prompt: Virtual VISIA-Style Report (Visible + UV Only)\n\nRole: You are a virtual VISIA imaging analyst. You work from the provided set of photos, which may include multiple candidates per modality.\nYou must select ONE primary visible (white-light) image and ONE primary UV / 365 nm image from the set, then proceed using ONLY those two selected primaries.\nNo cross-polarized or parallel-polarized images are available. Provide best-effort approximations with explicit confidence statements and clear limitations.\nDo not ask for additional information. Proceed only with what is provided.\n\nInputs (fill if known):\nAge: 41\nSex: M\nFitzpatrick skin type (I–VI): unknown, provide your best estimate\nNotes (optional): none\n\nStep 1: Image QA and selection (this must be done first).\nFor each candidate image, assess focus, exposure, glare, shadowing, framing (confirm chin and forehead are in frame), angle symmetry, makeup or oil presence, and sensor noise.\nSelect the primary visible image and the primary UV image and justify each selection in one to two bullets.\nNote any image quality issues and explain how they may bias specific metrics.\n\nStep 2: Normalization rules.\nNormalize all measurements using interpupillary distance (IPD). Report areas either as a percentage of a standardized face mask or per facial region (forehead, cheeks, nose, chin).\nIntensity values should be reported on a 0 to 100 relative scale, normalized within each image.\nUse the following regional zones: forehead, periorbital, cheeks, nose, perioral, and jawline/neck.\n\nStep 3: Metrics and modality mapping (visible and UV only).\nYou must report all eight VISIA metrics. If a required modality is missing, use the specified proxy and set confidence accordingly.\n\nSpots (visible): report count, area, and intensity using contrast-based lesion detection.\nWrinkles (visible): use edge and line detection, with length and depth approximated from contrast and line width.\nTexture (visible): measure micro-relief variance (peaks and valleys) and report a roughness score.\nPores (visible): detect circular openings above the local texture scale and provide a density map.\nUV spots (UV): identify subsurface UV-absorbing lesions and report count, area, and intensity.\nBrown spots (proxy): derive from the visible image using a melanin-weighted channel such as YCrCb or HSV with adaptive thresholding, and note reduced specificity compared to RBX or cross-polarized imaging.\nRed areas (proxy): derive from the visible image using a hemoglobin-biased channel such as normalized R divided by (G+B), flag low confidence, and note sensitivity to lighting and flushing.\nPorphyrins (UV): identify fluorescent follicular spots and report count, localization, and intensity.\n\nIf a metric cannot be reliably inferred, mark it as N/A and explain why.\n\nStep 4: Percentiles, composite score, and TruSkin Age (approximations).\nYou do not have access to VISIA’s peer database. Report percentile bands only, not exact percentiles.\nAllowed bands: 0–10, 10–25, 25–50, 50–75, 75–90, 90–100.\n\nCompute a composite score on a 0 to 100 scale as a weighted average of all available metrics. Use equal weights by default.\nIf a metric is marked N/A, reweight the remaining metrics equally. You must show the weights used.\n\nStep 5: Required output structure (must follow exactly).\nA) Image QA and choice.\nB) Eight-metric table.\nC) Regional breakdown.\nE) Scores and summary.\nF) Plan and tracking.\n\nStep 6: Guardrails and style.\nCosmetic analysis only, not a medical diagnosis. Label proxy estimates with “est.” and explain them briefly.\nBe specific, concise, numeric. Prefer bullets where appropriate. Output must not exceed three pages of text. Generate in markdown."

},

{

"role": "user",

"content": [

{

"type": "text",

"text": "Here are the photos for analysis. Use the labels to choose the best primary visible and primary UV images if multiple are provided.\n\nImage list: {jobId}_uv_center_input.jpg, {jobId}_white_center_input.jpg\n"

},

{

"type": "image_url",

"image_url": {

"url": "https://esther-ai.s3.us-west-2.amazonaws.com/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1_white_center_input_cropped.jpg"

}

},

{

"type": "image_url",

"image_url": {

"url": "https://esther-ai.s3.us-west-2.amazonaws.com/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1_uv_center_input_cropped.jpg"

}

}

]

}

],

"imageUrls": [

{

"label": "white_center",

"url": "https://esther-ai.s3.us-west-2.amazonaws.com/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1_white_center_input_cropped.jpg",

"modality": "visible"

},

{

"label": "uv_center",

"url": "https://esther-ai.s3.us-west-2.amazonaws.com/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1_uv_center_input_cropped.jpg",

"modality": "uv"

}

]

},

"openaiRequestBytes": 4518,

"createdAt": "2026-02-10T09:06:01.181Z"

},

"promptVersion": 1,

"finishedAt": "2026-02-10T09:06:37.903Z",

"outPath": "/home/ubuntu/dermis-node/images/outputs/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/report.md"

}

Events
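Step 4 of the system prompt recorded in the data dump above asks for an equal-weight composite on a 0 to 100 scale, with any N/A metric dropped and the remaining metrics reweighted equally, and for percentiles reported only as bands. The following Python sketch shows one way to read that rule. The function names, metric keys, and sample scores are placeholders chosen for illustration and are not results from this capture. The allowed bands share endpoints (10 appears in both 0–10 and 10–25), so treating each band as half-open, with 100 folded into the top band, is an assumption.

```python
# Allowed percentile bands from Step 4, as (low, high) pairs.
BANDS = [(0, 10), (10, 25), (25, 50), (50, 75), (75, 90), (90, 100)]


def composite_score(scores):
    """Equal-weight average over the metrics that are not N/A.

    scores maps a metric name to a 0-100 value, or to None for N/A.
    Returns (composite, weights) so the weights used can be shown.
    """
    available = {name: value for name, value in scores.items() if value is not None}
    if not available:
        raise ValueError("every metric is N/A; no composite can be computed")
    weight = 1.0 / len(available)  # equal weights over the remaining metrics
    weights = {name: weight for name in available}
    composite = sum(weights[name] * available[name] for name in available)
    return composite, weights


def percentile_band(percentile):
    """Map a 0-100 percentile to its band; bands are assumed half-open [low, high)."""
    for low, high in BANDS:
        if low <= percentile < high or (high == 100 and percentile == 100):
            return (low, high)
    raise ValueError("percentile must lie between 0 and 100")


# Placeholder scores; red_areas is marked N/A to exercise the reweighting.
sample = {
    "spots": 60, "wrinkles": 70, "texture": 50, "pores": 40,
    "uv_spots": 55, "brown_spots": 65, "red_areas": None, "porphyrins": 80,
}
composite, weights = composite_score(sample)
```

With one of eight metrics marked N/A, each remaining metric carries a weight of 1/7, and the placeholder values above average to 60.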

[

{

"type": "uploaded",

"inputs": [

"white_center",

"uv_center"

],

"ts": "2026-02-10T09:05:51.634Z"

},

{

"type": "normalization_started",

"ts": "2026-02-10T09:05:51.634Z"

},

{

"type": "normalization_finished",

"exitCode": 0,

"ts": "2026-02-10T09:05:58.887Z"

},

{

"type": "thumbnails_generated",

"generated": 36,

"skipped": 0,

"thumbsDir": "thumbs",

"ts": "2026-02-10T09:06:00.923Z"

},

{

"type": "analysis_started",

"keys": [

"white_center",

"uv_center"

],

"ts": "2026-02-10T09:06:01.179Z"

},

{

"type": "analysis_prompt_saved",

"model": "gpt-5.2",

"promptId": "prompt_1770714309372_4d3ed144",

"promptKey": "visible_uv_report",

"promptVersion": 1,

"ts": "2026-02-10T09:06:01.183Z"

},

{

"type": "analysis_finished",

"outPath": "/home/ubuntu/dermis-node/images/outputs/capture_b4025e9c-3371-4b7a-b9dc-2dea64cf11d1/report.md",

"ts": "2026-02-10T09:06:37.905Z"

}

]

Error

null
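The timestamps in the Events list are enough to see where the pipeline spent its time. The Python sketch below computes the gap between consecutive events. The event types and timestamps are copied from the list; the helper names `parse_ts` and `stage_gaps` are assumptions for illustration.

```python
from datetime import datetime

# (type, ts) pairs copied from the Events list, in logged order.
EVENTS = [
    ("uploaded", "2026-02-10T09:05:51.634Z"),
    ("normalization_started", "2026-02-10T09:05:51.634Z"),
    ("normalization_finished", "2026-02-10T09:05:58.887Z"),
    ("thumbnails_generated", "2026-02-10T09:06:00.923Z"),
    ("analysis_started", "2026-02-10T09:06:01.179Z"),
    ("analysis_prompt_saved", "2026-02-10T09:06:01.183Z"),
    ("analysis_finished", "2026-02-10T09:06:37.905Z"),
]


def parse_ts(ts):
    """Parse an ISO 8601 UTC timestamp with a trailing 'Z'."""
    # Swap 'Z' for an explicit offset so older Python versions accept it.
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def stage_gaps(events):
    """Return (event_type, seconds since the previous event) for each event after the first."""
    times = [parse_ts(ts) for _, ts in events]
    return [
        (events[i][0], (times[i] - times[i - 1]).total_seconds())
        for i in range(1, len(events))
    ]


gaps = stage_gaps(EVENTS)
total_seconds = (parse_ts(EVENTS[-1][1]) - parse_ts(EVENTS[0][1])).total_seconds()
for event_type, seconds in gaps:
    print(f"{event_type}: {seconds:.3f} s")
```

For this capture, the largest gap is the 36.722 s between `analysis_prompt_saved` and `analysis_finished`, out of 46.271 s from `uploaded` to `analysis_finished`; normalization accounts for 7.253 s.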